People really hate AI. This is to be expected because it’s shaking things up enormously, and everyone hates that. We don’t want change, we want things to be nice, and we want them to stay the same. We’ll only be happy when we find our way to the little house from the Frog and Toad books, and live there for eternity. No more insane politics, Silicon Valley “disruptors”, or issues for us to take a stance on. Just this, in perpetuity.

Too much to ask?

I get the pushback - but I also find the discourse frustrating. Most of us can’t grasp the gravity of what’s happening, and so we’ve latched onto talking points that are quite trivial. What’s the water consumption of data centers? Does using AI at work make us lazy? Is it immoral to use AI images? Real issues, sure, but I think there’s a reason we don’t see industry insiders talk about them much, and that’s because there are bigger fish to fry. They tend to be thinking “Is it going to kill everyone?”, or “How do we structure our economy when all labour is free?”. It seems a bit silly to worry about Studio Ghibli pictures when we could be a few years away from an extinction-level series of drone strikes, or a post-scarcity utopia.

One consequence of the discomfort people feel around AI, and the general distaste they have for it, is an attempt to undermine its capabilities. Models often get described as stochastic parrots, and as lacking the ability to “truly understand” anything (whatever that means). There’s a misconception that AI models work by reading the internet, and then simply “predicting the next word” after you ask them a question. As if a model is an amalgamation of all of us, trained on all our text, that thinks “What would the average person on the internet say now?” when you ask it to spell check your emails. This view is oversimplified.

Reply. Reinforce. Retrain.

The above scenario is how models start, sure. Their pre-training consists of reading the internet, and learning what the hell words are in the first place. This may come as a surprise, but if you ask your median internet user anything, you will probably not get a useful answer. If AI models were really just reading the internet and predicting the next word, GPT would likely be calling us misspelled racial slurs a lot more often.

What makes AI models actually useful is Reinforcement Learning. This is where we carrot and stick them into doing what we want. The process is pretty simple.

We ask the model something and it takes a stab at it.

We ask people which answers are the most useful (you may have even seen this in GPT yourself: sometimes it gives you two answers and you choose the better one).

We reinforce the model to output more answers like the winners, and fewer answers like the losers.
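The three steps above can be sketched as a toy loop. This is only an illustration under heavy assumptions: the "model" is just a list of scores over three made-up answers, the human raters are replaced by a hypothetical `USEFULNESS` table, and the update rule is a simple nudge up or down. Real systems are far more involved (they typically train a separate reward model on the human choices first), so treat every name here as hypothetical.

```python
import math
import random

# Hypothetical candidate replies to "Tell me about yourself", with made-up
# usefulness scores standing in for the judgement of human raters.
ANSWERS = [
    "I enjoy hiking and cooking.",
    "Let me describe my small intestines.",
    "asdf lol",
]
USEFULNESS = [0.9, 0.2, 0.1]


def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    top = max(logits)
    exps = [math.exp(x - top) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def train_on_preferences(logits, usefulness, rounds=2000, lr=0.1, seed=0):
    """Toy version of: reply, ask which reply is better, reinforce."""
    rng = random.Random(seed)
    logits = list(logits)
    indices = list(range(len(logits)))
    for _ in range(rounds):
        # Step 1: the model takes two stabs at the question.
        a, b = rng.choices(indices, weights=softmax(logits), k=2)
        if usefulness[a] == usefulness[b]:
            continue  # no preference between them, nothing to learn
        # Step 2: the (simulated) rater picks the more useful answer.
        winner, loser = (a, b) if usefulness[a] > usefulness[b] else (b, a)
        # Step 3: more answers like the winner, fewer like the loser.
        logits[winner] += lr
        logits[loser] -= lr
    return softmax(logits)


if __name__ == "__main__":
    final = train_on_preferences([0.0, 0.0, 0.0], USEFULNESS)
    for answer, p in zip(ANSWERS, final):
        print(f"{p:.3f}  {answer}")
```

All three answers start out equally likely. After training, the answer the raters prefer takes most of the probability, even though nothing about "predicting the next word" has changed.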

After reinforcement learning, the AI model is no longer just predicting what the next word is in a sentence, it’s predicting what we want to hear. When people say AI is predicting the next word, they make it out as if it thinks like Michael Scott.

However, it’s more like what we do at job interviews. When they ask us to tell them about ourselves, we know to talk about our hobbies and interests, and not about our small intestines or our first sexual experience.

Newer reasoning models go one step further. Models like o3-mini-high, Claude 3.7 Sonnet, and Grok 3 (god they’re so bad at naming models) aren’t just reinforced on their output. They’re reinforced on their working out. Exactly the same way we were in maths class.

The basic premise is very similar to before.

Ask a model a question with a single answer (like a maths problem).

Make it show its work by showing its chain of thought.

If the chain of thought is sensible, and the answer’s correct, reinforce it.
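As a rough sketch of that last step, here is a toy reward function that only pays out when both the working and the final answer hold up. The step format `(a, op, b, claimed)` and the function names are assumptions made for illustration; how real labs score a chain of thought is not described here and is certainly more sophisticated.

```python
import operator

# The only operations this toy checker understands.
OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul}


def step_is_sensible(step):
    """A step is (a, op, b, claimed): does a op b really equal claimed?"""
    a, op, b, claimed = step
    return op in OPS and OPS[op](a, b) == claimed


def reward(chain_of_thought, final_answer, correct_answer):
    """Return 1.0 only if every step checks out AND the answer is right."""
    sensible = all(step_is_sensible(step) for step in chain_of_thought)
    return 1.0 if sensible and final_answer == correct_answer else 0.0


if __name__ == "__main__":
    # Question: what is 12 * 7 + 5? (correct answer: 89)
    good_working = [(12, "*", 7, 84), (84, "+", 5, 89)]
    bad_working = [(12, "*", 7, 74), (74, "+", 5, 89)]  # right answer, wrong maths

    print(reward(good_working, 89, 89))  # reinforced
    print(reward(bad_working, 89, 89))   # not reinforced: the working is wrong
    print(reward(good_working, 90, 89))  # not reinforced: the answer is wrong
```

The second case is the one that matters: a lucky guess with nonsense working earns nothing, just like in maths class.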

Now it’s not only trying to predict what we want to hear, but it knows how we go about reasoning. This is handy, because reasoning is a basic building block for enquiry. If you know how to reason, you can find your way to all sorts of new knowledge, meaning AI can now in principle discover new information. The models are just still too dumb to do that reliably, for now.

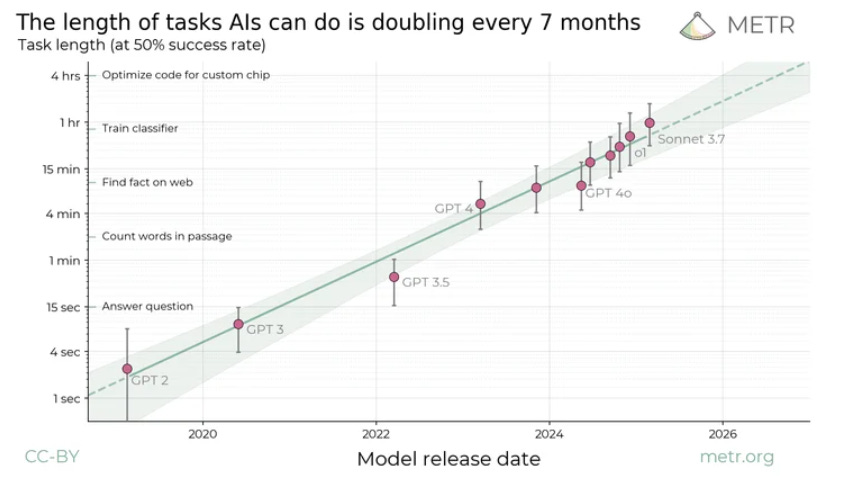

The reason we couldn’t do this until recently is that we hadn’t scaled compute up enough. However, as we build bigger models and spend more compute on inference (the part where it stops to think step-by-step), models are getting better at long-term tasks. In fact, the length of time they can work productively is doubling every 7 months.

If you don’t understand the significance of this, google “emperor rice grains chessboard”.
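For anyone who would rather see the arithmetic than google it, here is a small sketch. It assumes a hypothetical starting point of a 1-hour task and assumes the 7-month doubling simply continues, which nobody can promise; the function name is made up for illustration.

```python
def task_horizon_hours(start_hours, months, doubling_months=7):
    """Task length a model can handle after `months`, if it doubles every
    `doubling_months`. Pure extrapolation, not a forecast."""
    return start_hours * 2 ** (months / doubling_months)


def chessboard_grains(squares=64):
    """One grain on the first square, doubling on each square after that."""
    return sum(2 ** square for square in range(squares))


if __name__ == "__main__":
    # Hypothetical model that can handle a 1-hour task today.
    for months in (0, 7, 14, 35, 70):
        print(f"after {months:>2} months: {task_horizon_hours(1, months):g} hours")

    # The emperor's bill: 2**64 - 1 grains of rice.
    print(f"{chessboard_grains():,} grains")
```

Ten doublings take 70 months and turn one hour into 1,024 hours. The chessboard has 64 squares, which is why the emperor ends up owing more than 18 quintillion grains.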

This brings me to the next thought I have when people bring up stochastic parrots - so what? People regularly aim to undermine the capability of AI by appealing to something special and innate in us. We don’t just predict the next word, we truly understand creativity and reasoning. These models are just a poor facsimile of our genuine intelligence.

They are dumb for now, sure, but whether or not they “truly” understand something is irrelevant. If you can prompt an AI to do a week-long task, and it takes a day to do it, will it matter at all if there are no lights on inside? Will your boss think “Hmmm, it would certainly be convenient to ask GPT-6 to do this, but it doesn’t truly understand Excel, so I’ll pay a human to do it 7x slower instead”? Imagining that AI isn’t going to transform the world because it’s just predicting what we want to hear, or what lines of reasoning we want it to take, is like thinking a replica sword can’t hurt you. If it’s sharp and gets the job done, it doesn’t matter if it’s an authentic Viking artefact, or made by a YouTuber in his workshop. Is there something special about our intelligence that a machine can never replicate? Maybe! Is there something special about what we actually do that machines can’t replicate? No, and that’s becoming clearer as the months go by.

There are plenty of reasons to be critical of AI right now. I hate that we’re now in another arms race. I hate that safety testing has essentially been abandoned. I hate that the future of the world, and potentially much more than that, will probably be decided by a few tech CEOs in Silicon Valley and *gags* Donald Trump (seriously, a man with a Comedy Central roast will likely be the most consequential president in history!). However, acting as though AI is just a fad because it can’t replicate what we do is not only delusional, it’s dangerous. It’s failing to take humanity’s last invention seriously because we’re too insecure to consider that we might not be special. That is not a winning strategy, and it’s time ordinary people took things seriously.

Mate, I think so many people are still sleeping on the latent capability of AI (even as it is now), but I don't mean that in an AI fanboy way.

I currently lecture at a tertiary institution, and pretty much every single student (and most staff) uses AI in some way. But the problem is that the vast majority of them use it in an offhand, surface way, which I think is a huge issue at a macro level.

Lecturers use AI to generate content, students use AI to generate assessments, and everyone's acting like the whole process of learning is still genuine, when it feels more like a farce. I ask students questions in class and they don't just give me an answer, they use AI to give me a generic answer, bypassing their brains and critical thinking in the process.

To your point about reasoning, people are ALREADY outsourcing their reasoning to AI models.

So what I've started doing is teaching students how to think and engage whilst using AI. It strikes me as deeply ironic that whilst there's a group of tech boffins teaching AI to better reason, perhaps we need to be even more aware of teaching humans how to better reason as well.