I have some questions for my readers! It’s a short, anonymous form with a couple of questions about some future ideas. I also have some optional demographic questions in there purely out of curiosity. Please fill it in if you have the chance!

I was at a wedding this past weekend and naturally AI came up. I'd be surprised if it hadn't, really. It's been the hot topic for a while and people pretending to be grown-ups in ties and nice dresses love talking about hot topics. I bet every wedding in 2007 had similar conversations about how "everything is different now" and "kids these days are becoming too reliant on socialising at the Facebook" or whatever.

The people I spent the weekend with were mostly academics, and I have since learned that academics really hate AI. On reflection, it's expected. Marking GPT's work instead of your students' probably sucks a lot of the joy out of teaching. I, however, am part of the "AI has its risks, but if done well it will make our lives unimaginably better" club, so I was woefully outnumbered by my fellow partygoers. I was also woefully out-prosed because everyone else had a PhD and I still get excited when I find a good stick.

So I sat back and listened quietly while the usual talking points came up. Someone would mention their worries around plagiarism, the slop-ification of the internet, or the atrophy of young minds, and everyone else would nod along politely. Maybe one or two would sheepishly admit to using it for very specific use cases, but qualify that it's a rare indulgence rather than a part of their routine. I only started to show my hand when everyone concurred that AI’s environmental impact was untenable. I can keep quiet when someone complains about Studio Ghibli slop, but critiquing AI's water use with meat in hand will not do! Weirdly, when I pointed out that a kilo of cheese uses the same amount of water as hundreds of thousands of prompts, the dairy industry was spared the same heat GPT had been getting all evening (probably because cheese is yummy, and eating slightly less yummy food is totally unthinkable).

The conversation culminated in a final flurry that I feel reflects a growing division among us. One man put his glass down, and unleashed a rant I suspect he'd been holding in since the start of the conversation. To paraphrase heavily, it was something along the lines of:

"Yes, I agree with all these criticisms but my main problem with AI is that it's shit! Whenever I use it, it's shit! I can always tell when my students use it because it's shit! Honestly, how anyone thinks it can cause serious job losses is beyond me - because it’s really shit!"

Not his exact words, but that was the gist. He did say shit a lot.

This left me bewildered - what AI has he been using?? Modern models are very much not shit. In fact, they're already incredible and getting better by the day. All of us can make basic code now, and if you're good at prompting, you can make programs that are quite sophisticated. I totally get how someone can have vastly different views on the net value of AI, but how is it possible for us to have such different views on the capability of it? We all go to the same URLs and use the same apps, no?

I think there are two main reasons. The first is best exemplified by this sentence.

"All toupees are awful! I've never seen one that I couldn't tell was a toupee!"

Of course, while I also feel this way, anyone who says this has an obvious blind spot. If a toupee were good, you wouldn't notice it, and so the only data you have comes from the noticeable ones. As being noticeable is what makes a toupee bad, this clearly skews your results.

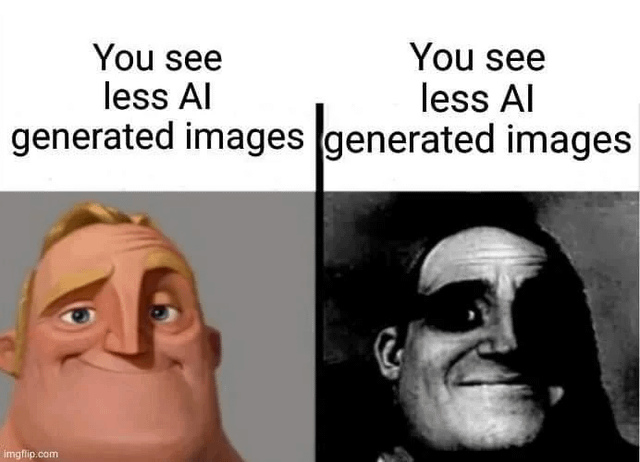

I wager something similar happens with AI. When AI works, you don't notice. If it replaces us well, that means that it's hard to distinguish its output from a person’s. If it does it poorly, it’s very easy to spot. I bet the people that think all AI output is crap are just not noticing the numerous AI successes.

The second reason for our differing views on AI capability became apparent when he told us he barely used AI. I imagine this was ideologically motivated for the most part, but it sounds like he's had the same experience a lot of people have - he tried to use it a few times, got crap outputs, and so doesn't see the value in it. Understandable. After all, if there was a search engine that always got confused and took me to a picture of a young Christian Bale, I bet I wouldn't use it very often either (unless it was my birthday).

The problem is, this becomes a bit of a feedback loop. You don't use AI very often because your outputs are shit, but I'm afraid your outputs are shit because you're not using it very often. We're at that funny transitional stage where some people have grokked how to use the new tool while others haven't yet. It's like when you watch your dad try to search for flights and he googles "Where can I get a plane to Tenerife please?" instead of "flights London to Tenerife". There are people who still feel like Google is crap because they don't know how to condense their queries into relevant keywords.

Anyone with experience knows AI is the same. Just this last week my colleague complained that GPT couldn't complete some task. However, when I asked him what model he was using, he said "I dunno, I think 4? That's the default, right?" We then tried again with a reasoning model and wrote step-by-step instructions with examples of output - and y'know what? It turned a tedious hour-long task into a 3-minute one. As the kids say, skill issue.

*clicks drop down to Gemini 2.5 Pro*

If you think AI is shit, this sort of thing should concern you. Abstaining from AI use because you think it doesn't work is the modern-day equivalent of persisting with fax because you can't work email out. You're fine for now - but you will be left behind if you can't adapt. What we have is an ever-widening gap between those that get value from AI and those that don't, with each side having their position cemented by their regular practice, or lack thereof.

It makes me think that the inevitable job losses from AI agents are going to hit some people like a ton of bricks. If your only experience is badly prompting GPT-4o, it’s hard to see how AI could ever do what you do for work. But the time horizons are lengthening, and the skillset is expanding - strikes me as smart to familiarise ourselves with the paradigm shift instead of keeping it at arm’s length until it’s too late.

AIiiiiiyyyyyy!!!!

Our most recent post is about how AI could play out even if it never gets better. A big unknown that makes predicting tough is its lagged and uneven uptake. "All of this is going to be distorted for a while as talented, high-agency people ignore the tech, and other, less talented people stumble across it earlier because they’re into crypto or something."