This year has been a golden age for AI slurs. “Clankers”, “Tinskins”, “Wirebacks” - there really is no limit to human creativity. While some think AIs could take over the world, we can be certain they’ll never surpass our ability to invent new epithets. After all, we have millennia of experience, and show no signs of slowing down.

We haven’t even met aliens yet! That’s when we’ll really hit our stride.

I’ll concede that, despite being generally optimistic about AI, I find most of these new words quite funny. Especially “Clanker”, which seems tailor-made to be yelled while ramming a frag grenade into a robot’s jaws and pulling the pin. However, I can’t help feeling it’s a bit dangerous to get so used to hating on AIs and seeing them as morally worthless.

To be clear, I don’t mean it’s dangerous to be anti-AI in general. Maybe there are legitimate reasons to oppose the proliferation of AI. When you consider the sloppification of the internet, the death of social mobility, or existential risk, it’s not ludicrous to want to stop all this change. I don’t assume someone is making a mistake by being anti-AI, because the topic is so consequential and complex that it’s hard to know the right view (unless they complain about water use while eating cheese, in which case my eyes glaze over pretty quickly).

However, there’s been a recent turn from being anti-AI at large to hating AIs themselves: not simply opposing the deployment of AI, but specifically spitting on the likes of GPT-5, Claude, or Figure’s robots. It’s as if we can tell a world of intelligent robots is coming soon, and we’re laying the groundwork to make them second-class citizens. To other them, place ourselves above them, and ultimately justify their mistreatment.

Now, my guess is that this would be fine, because they aren’t living beings. I certainly don’t think anyone saying “Clanker” today is hurting ChatGPT’s feelings. However, who knows whether, down the road, the lights will switch on inside them? It’s not as if we understand consciousness well enough to rule out the idea that a sufficiently advanced AI could gain subjective experience. It would certainly be weird if you could make consciousness from sand and metal, but it’s also weird that you can make it from carbon and water - and yet here we are!

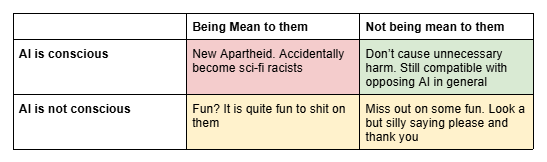

This isn’t to say I’m convinced robots will be conscious in the future (my guess is they probably won’t be), but the uncertainty is reason enough to get into the habit of treating them with respect, even if you think the chance they’re aware is as low as 1%. If we’re nice, the worst-case scenario is that we spend some time being polite to robots that aren’t conscious, and look a bit silly. However, if they do end up conscious, the worst-case scenario is that we accidentally build a new apartheid state and all become racists toward a new group of thinking, feeling people. That seems like the sort of thing we’d like to avoid! When the risks are that asymmetrical, the choice becomes pretty straightforward.

Bottom-left is not worth risking top-left for, right?

I should say, you can still be anti-AI and believe all this. In fact, it’s not clear why the AIs themselves deserve your hate anyway. Surely you should just be mad at the people who made them? They didn’t choose to be made, or to be used for all the things you hate about AI. If you’re anti-AI, chances are you’d consider even conscious robots devoid of free will - but if that’s the case, how can you hold them personally responsible? It might be best for the world to prevent their invention, or accelerate their decommissioning, but it’s not clear why either of those would require being cruel to them along the way. In the same way, putting down a gorilla that’s tearing through Disneyland Paris wouldn’t require hating it. You can just intervene, and even recognise there’s something regrettable about it. After all, it would be quite sad if we created a new species of life but had to decommission it for our own safety. It’d be even sadder if we debased ourselves by insulting them and rejoicing as we did so.

I’ll also say that becoming hateful is just a bad habit in general. You’re unlikely to become the best version of yourself if you give in to the base human desire to other some demographic, even if it turns out you’re just roleplaying bigotry with dead machines. We’re also less likely to make the best decisions about AI if our thinking is polluted by robophobia (yes, I also think that word sounds silly in 2025, but I suppose it’s the correct one). If we spend five years seeing them as bottom-feeding scum, we may find ourselves downplaying the benefits of AI, or unwilling to accept potential evidence of their consciousness. This issue is exceptionally important, and we need to maximise our ability to give it a level-headed assessment.

So, you’ll still catch me saying please and thank you to GPT. If I see robots walking down the street in a few years, I’m not going to insult them as I walk past. There’s so much to lose if it turns out there’s someone in there, and nothing to gain by being hateful - except the way the slurs roll off the tongue, I suppose. I guess I’ll call them “robotically inclined persons” if I ever need to blow a hole through their chests with a 12-gauge? Seems like the polite thing to do.

I just think people should try to be kind in general, and keep a compassionate mindset. IMO.